Language

November 20, 2011

It is not just the relation between brain matter and thinking for which language seems to play a salient role. It is more appropriate to conceive of language as the most important ingredient for any aspect of the “relationability” of humans, our ability to build and maintain relations to any aspect of the world. Yet, it is not the only important one.

Quite obviously, it would be seriously presumptuous to try to develop a theory (just another one…) about language. So, we take an easy first step by declaring that we largely follow the Wittgensteinian view of language. Still, there are many aspects that we have to derive in other chapters, such as associativity, modeling, the status of symbols in the brain and in thinking (more generally: in epistemic affairs), the famous transition from the probabilistic to the propositional, the enigma of the relation between structure and content, and so on.

Here, our interest is driven by the difference between “(Natural Language) Processing” and “Natural (Language Processing),” where we would like to move from the former to the latter. From that we can derive two directions.

- (1) With regard to the question of the possibility of machine-based epistemology, we have to understand the role of the symbolic in thinking from the perspective of language.

- (2) We have to understand language and its role in thinking if we would like to “implement” the basic conditions for developing a capability to deal with (human) language.

One of the most puzzling issues about language (and I take these puzzles as something quite positive, if not even wonderful) is the rich set of observable phenotypes. It stretches from arrangements consisting of only a few dozen words up to several hundred thousand, from designs almost free of any (linguistic) structure to pieces that are almost completely regulated. There is a large variety in the relation between rules for arrangements and how things are said. And above all, the ways of handling, but also of introducing, indeterminacy (e.g. perlocutionary [1] or even “translocutionary” aspects) in the stream of utterances, signs and language tokens exhibit an extreme variety.

Yet, for all languages it holds that private languages are not possible. This is even true for programming languages, which are an extremely reduced version of a language. Wittgenstein’s private language argument reaches far beyond what could be called the necessity for commonality.

There are essentially two indeed almost explosive consequences. The first one concerns meaning: Meaning is not a mental entity [2]. Meaning does not appear just by some mental processes. Meaning can’t be the result of some computation. Hence, it is also impossible to “transfer” meaning from one entity to another.

It is therefore not quite rewarding to assume that the purpose of language is to transfer meaning unambiguously, an assumption that abounds in linguistics and computer science. But one of the most serious instances of deep nonsense is the attempt to create a database, call it an ontology and then claim that the meaning of words and concepts has been captured in it. This nonsense is not just a marginal matter, it is a dangerous phenomenon, because people start to believe in it (see the so-called semantic search engines like Wolfram Alpha, which is said to be based on formulas… I really could not imagine a more ugly thought…)

Yet, it still makes sense to say that we know. The private language argument, however, enforces a radical change of our conception of knowledge. Given (or, for the moment: accepting) the fact that our thinking takes place in structures like our brain (or body as a whole), the question arises: what is going on there? On the level of neurons there are no signs. Mentalese (Fodor’s hypothesis [3]), societies in mind, or of minds inside the skull (Minsky [4]), and any other concept referring to some kind of homunculus are wrong; such things cannot and actually do not exist. Equivalently, it is impossible to “program” language understanding as we program, say, spreadsheet software.

On the other hand, we know that the brain is indeed a complex system, full of emergent phenomena. Third, if we say “I know,” we clearly refer to internal and private and stateless processes taking place in our brain. We also have the experience that words appear in our thought, yet not in the same way as in an external text. They are always somehow fluffy, and in most speech acts, it is more an “it is speaking” than an “I am speaking”. The latter is a diagnostic statement that is always a posteriori to the utterance.

From all this circumstantial evidence we have to conclude (and it is the only possible conclusion) that (1) we have to translate our internal models (on the level of “mind matter”) into language, and (2) language itself is the tool for that translation.

So our first result can be summarized in the following two statements.

- (1) Meaning is not a mental entity.

- (2) Language is the tool and the result of a mental translation process.

Everyone knows the well-founded feeling (!) of not having found the “appropriate” word. Yet, we can’t talk about that in any more detail; it is a true language feeling. The translation of a set of internal models, residing somewhere in the associative matter of the brain, into language is deficient, and always and necessarily so. The translation itself is some kind of modeling, of course.

This translation not only removes information, it also adds a lot. Translating something into a series of words means creating a text. Our internal relationships thus are quite similar to the hermeneutic setting. Foucault was completely right to investigate the “hermeneutics of the subject” [5]. Nevertheless, it would be quite inappropriate then to return to the homunculus. Human thinking thus is not composed of some kind of author and an audience. Anyway, we can understand now that we relate to ourselves always only in a modeling relation. If it were not wrong to say so, we could say that we have no direct access to ourselves. This “me” appears only after rather complicated modeling. There is no “me” instance somewhere in the brain or the mind or the body. It is impossible. Claiming otherwise, one would give up all the insights, up to and including the denial of the possibility of a private language. Equivalently, one would then claim that we are trivial, pre-programmed machines.

Returning to our two introductory questions from above, we recognize that we have answered them both.

Our results are not only relevant for the realm of natural languages but also quite important for what is called “formal concept analysis.” In the same way that we have to translate the almost fleshy part of our thoughts into language, we have to translate them into logics, or any other formal concept. That is, logics and formalisms, as we communicate them, are epiphenomena. Analogous to Wittgenstein’s private language argument, we thus can conclude that there is no private logics. Logics cannot be programmed and there is absolutely no objectivity in it, just as little (or as much) as is the case for language. For Wittgenstein, logics was not a starting point (which would need arbitrary axioms); it was a consequence of “using and partaking in language.” [6,7] This dependence of logics on practical living introduces a distinction that is often overlooked. Logics is a purely transcendental entity, something that we have to “assume” like space and time. Of course, it does not make much sense to doubt these. But logics cannot be “found” in the world. It is without sense to babble about truth, or truth values. We cannot talk about truth in this world, just as we cannot talk about god being in this world. If people do so nevertheless, the usual consequence is murder, often a million-fold (think of religious wars, ideological wars or civil wars). What we do find in the world instead is a practical, negotiated, and negotiable instance of transcendental logics. It almost looks like (transcendental) logics, but is not. Any real-world logics, even in mathematics, is “contaminated” by usage, that is, by some kind of reference to rather arbitrary material contexts.

These results have some rather non-trivial consequences for epistemology, of course. First, we have to understand that we cannot “transfer” knowledge on the symbolic level, neither as a sequence of symbols or signs, nor as a series of commands. The whole bag of rule-following in social contexts is implied. This can be related to the famous Grey Parrot “Alex,” who was “educated” by Irene Pepperberg [8].

Second, the role of the “me” in sentences like “I know” or “I am thinking” has to be re-evaluated. There might be some rare situations where this indeed happens, but in most situations it is not appropriate to assign too much weight to such statements. We actually are entitled to ask “Where does thinking take place?” since the answer is no longer “in the brain” or “in the mind”. The brain and its minds are hosting processes that are private, yes, but this privacy is that of a liver working in the belly of the body. It is not relevant for the issue of epistemology. If three (or 2, 4, 12, 25, …) humans are talking to each other, we can say, much as a programmer does, that the thoughts are running as a kind of distributed process. It does not make any sense to ask where in a self-organizing map the processing of a particular item took place, even if we can find that item at a definite (virtual) location in the SOM (or in brain matter) after processing. Yet, that definiteness appears only after the processing, if at all.
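The remark about the self-organizing map can be made tangible with a small sketch. It is only an illustration under our own assumptions: a one-dimensional map, a Gaussian neighbourhood, linearly decaying learning rate and radius, and the hypothetical names `train_som`, `best_matching_unit` and `touched`, none of which are taken from a particular library. The sketch counts how often each node gets adjusted during training. Every presented item changes a whole neighbourhood of nodes, so the “processing” is spread across the map, while a definite location for an item can be read off only afterwards.

```python
import math
import random


def best_matching_unit(weights, x):
    """Return the index of the node whose weight vector is closest to x."""
    return min(
        range(len(weights)),
        key=lambda i: sum((w - v) ** 2 for w, v in zip(weights[i], x)),
    )


def train_som(data, n_nodes=10, epochs=50, lr0=0.5, radius0=3.0, seed=0):
    """Train a one-dimensional SOM and return (weights, touched).

    touched[i] counts how often node i was adjusted (an illustrative
    bookkeeping device, not part of the usual algorithm).
    """
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_nodes)]
    touched = [0] * n_nodes
    for epoch in range(epochs):
        frac = 1.0 - epoch / epochs        # decays from 1 towards 0
        lr = lr0 * frac                    # learning rate shrinks over time
        radius = max(radius0 * frac, 0.5)  # neighbourhood shrinks over time
        for x in data:
            bmu = best_matching_unit(weights, x)
            for i in range(n_nodes):
                dist = abs(i - bmu)        # distance on the map, not in data space
                if dist <= radius:
                    h = math.exp(-(dist ** 2) / (2 * radius ** 2))
                    weights[i] = [w + lr * h * (v - w)
                                  for w, v in zip(weights[i], x)]
                    touched[i] += 1
    return weights, touched


if __name__ == "__main__":
    rng = random.Random(1)
    items = [[rng.random(), rng.random()] for _ in range(20)]
    weights, touched = train_som(items)
    # Many nodes took part in the processing of the items ...
    print("adjustments per node:", touched)
    # ... yet afterwards each item can be found at one definite node.
    print("location of first item:", best_matching_unit(weights, items[0]))
```

The counts in `touched` belong to the process, the index returned by `best_matching_unit` belongs to the result; only the latter looks like a “place”.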

This triggers a further, quite different perspective. Quite obviously, thinking is a strongly deterritorialized phenomenon. It is almost senseless to try to locate thinking. It is not only useless, it is even wrong to claim that thinking takes place in the brain. The whole area of the so-called analytic philosophy of mind (e.g. Ansgar Beckermann [9]) is devoid of sense.

This deterritorialization of thought links us directly to Deleuze [10], his concept of “images of thought” [11], the differential and his notions of immanence and virtuality. All these insights would not be applicable if we dropped, even implicitly or as a matter of fact, the impossibility of private languages. Saying so, it is also clear that we do not agree with the claim that language is a transcendental entity (e.g. H.J. Schneider [12]); language is a very worldly phenomenon, although it raises the issues of immateriality, immanence, and virtuality. Deleuze and Guattari provided us with a smart and beautiful entry point into this field with their book “What is Philosophy?” [13] A nice account of a “Deleuzian” interpretation of the “brain” has been given by Lambert and Flaxman [14]. After all, I find it also almost beautiful that there is a deep link between the philosophical stances of Deleuze and Wittgenstein, despite the fact that Deleuze once called Wittgenstein’s philosophy a “catastrophe.”

Conceiving of thinking and language as deterritorialized phenomena is equivalent to the insight that there cannot be a single model of them. In much the same way it is not possible to “isolate” brains. There is no such thing as a single brain [15], not only in the case of humans. This applies to animals and machines as well. It is also not possible to isolate a single sentence in order to “analyze” it. Trying to do so is simply silly. If at all, a sentence in language is a phenomenon where the virtual is captured by the immanent. “As such,” a sentence is pure void.

Here is the perfect place to recall Stanisław Lem and his famous novels and short stories. In one of the pieces, “Personetics,” [16] he shows that simulated beings need to be simulated in a group to develop and evolve a language on their own.

We cannot program language, language understanding, or symbolic representations of texts by following some utopian specification and then call it knowledge. Yet, it is a fact that we as humans think also by means of our body, i.e. the body is a necessary condition for it. The basic models spring from the associativity of our material arrangement: you may call it brain, nervous system, central processing unit, self-organizing map, etc. Of course, we should not commit the mistake of representationalism here, asking about the “wiring” or data structures. The processing of information in the brain is first a probabilistic affair; the capability to deal with propositional structures is only a consequence of several factors, language among them.

The aspect of corporeality is not limited to the biological matter that we usually call “body”. Our biological body is just one particular means to provide self-sustaining complexity and persistence. Even in the most abstract regions we find habits that could be said to form a “body of thought,” blurring the categorial difference between the material and the immaterial.

Thus, before starting to program the processing of “natural languages” we have to answer two rather important questions, which will help us avoid the main obstacles.

What then are the abstract conditions for being a language being?

How should the proposal of such conditions be arranged?

Before we head over to the chapter about the penultimate conditions of language, thinking and knowing (LTK), we may collect our achievements (also from some other chapters) here in a short list:

- LTK is a deterritorialized phenomenon;

- LTK is based on the dialectics induced and established by the contrast between a community and an individual;

- epistemologically, the only way to establish a contact to the world as well as to the brain (both are complex entities) is through modeling;

- as an activity, LTK implies virtuality;

- as a phenomenon, LTK implies planes of immanence;

- as a performance, LTK implies impredicativity, vagueness and processuality that result in a metaphoricological structure;

- models are used as a source for equivalence classes (but see our objections against the set theoretic approach), yet this association is fluid and it cannot be determined in a unique, singular, or stable manner;

- models can contain neither the conditions of their applicability or application, nor those of their symbolic or referential setup.

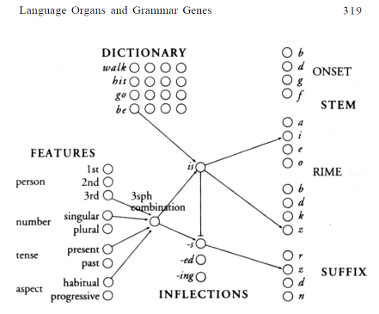

It seems that the conditions for the triad of language, thinking and knowledge cannot be formulated in a positive, definite manner. It is not possible in principle to formulate “the conditions for language are such and such.” This would again introduce a territorializing stance, which in turn would introduce a self-contradiction into our thought. Pinker got trapped by this misunderstanding throughout his book about the proclaimed language instinct [17], actually even invoking some sort of “language cybernetics” (e.g. pp. 303, 319). In the chart reproduced from his book [17], the circles are indeed meant to represent neurons, or identifiable groups of neurons. One can see the excitatory and inhibitory relations that reflect a cybernetic mindset. (Pinker just forgot to draw the homunculus itself…)

This in turn lets us conclude that it is not even possible to give a positive definition of language, thinking or knowledge itself. We strongly believe that even modest materialism is dead: neither political materialism nor scientific positivism has been able to keep its central promise of providing “stable grounds.” Quite likely, “stable grounds”, or even “foundations”, is an inappropriate goal in itself [18].

Actually, from a different perspective, the insight that there is a “clear barrier to analyzing knowledge” [19] has received more and more support since Gettier posed his problem [20], according to which knowledge can’t be conceived as justified true belief. The problem in the view that Gettier attacked is precisely equivalent to the claim that there is a private language. We may build a series of related claims: No private language, no positivism in epistemology, no criteria-based justification. Hence, no quarrels about values.

Programming the capability, and hence the conditions, for “language understanding” is probably easier than it seems at first sight. Restraint regarding explicit control is likely the preferable strategy. Actually, there is almost nothing to “program” besides an appropriately growing system of growing self-organizing maps (our notion of “growth” is discussed elsewhere). As we have seen, the conditions for a “languagability” are outside of “processing” language.

- [1] John Searle

- [2] Wilhelm Vossenkuhl

- [3] Jerry Fodor

- [4] Marvin Minsky

- [5] Michel Foucault

- [6] Colin Johnston, Tractarian objects and logical categories. Synthese (2009) 167: 145-161.

- [7] Williams

- [8] Irene Pepperberg

- [9] Ansgar Beckermann

- [10] Gilles Deleuze, Félix Guattari, Mille Plateaux. 1980.

- [11] Gilles Deleuze, Difference and Repetition.

- [12] Hans-Jörg Schneider

- [13] Gilles Deleuze, Félix Guattari, What is Philosophy?

- [14] Gregg Lambert, Gregory Flaxman (2000), Five Propositions on the Brain. Journal of Neuro-Aesthetic Theory #2 (2000-02).

- [15] Peter Sloterdijk. Sphären II. p.4.

- [16] Stanisław Lem, Personetics. Reprinted in: Douglas Hofstadter (ed.), The Mind’s I.

- [17] Steven Pinker, The Language Instinct. 1995.

- [18] Wilfrid Sellars, Does Empirical Knowledge have a Foundation? in: H. Feigl and M. Scriven (eds.), The Foundations of Science and the Concepts of Psychology and Psychoanalysis. Minnesota Studies in the Philosophy of Science, vol. I. University of Minnesota Press, Minneapolis 1956. pp. 293-300.

- [19] John L. Pollock, Joseph Cruz, Contemporary theories of knowledge. Rowman & Littlefield Publishers, Lanham 1999. pp. 13–14.

- [20] Edmund Gettier (1963), Is Justified True Belief Knowledge?, Analysis 23: 121-123.
